The False Promise of Driverless Cars

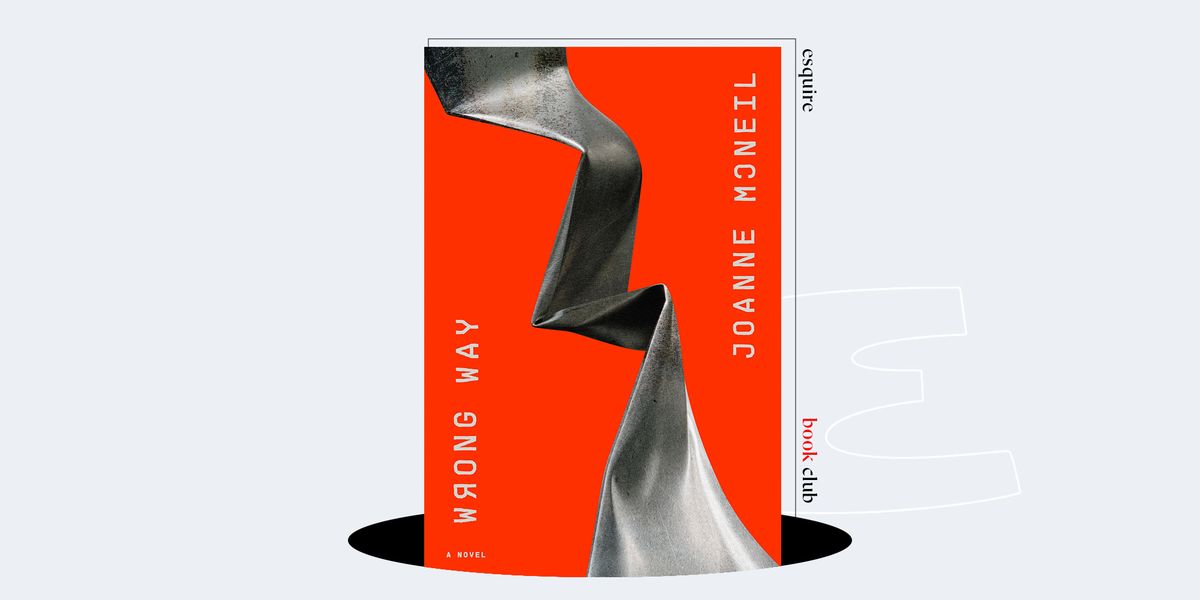

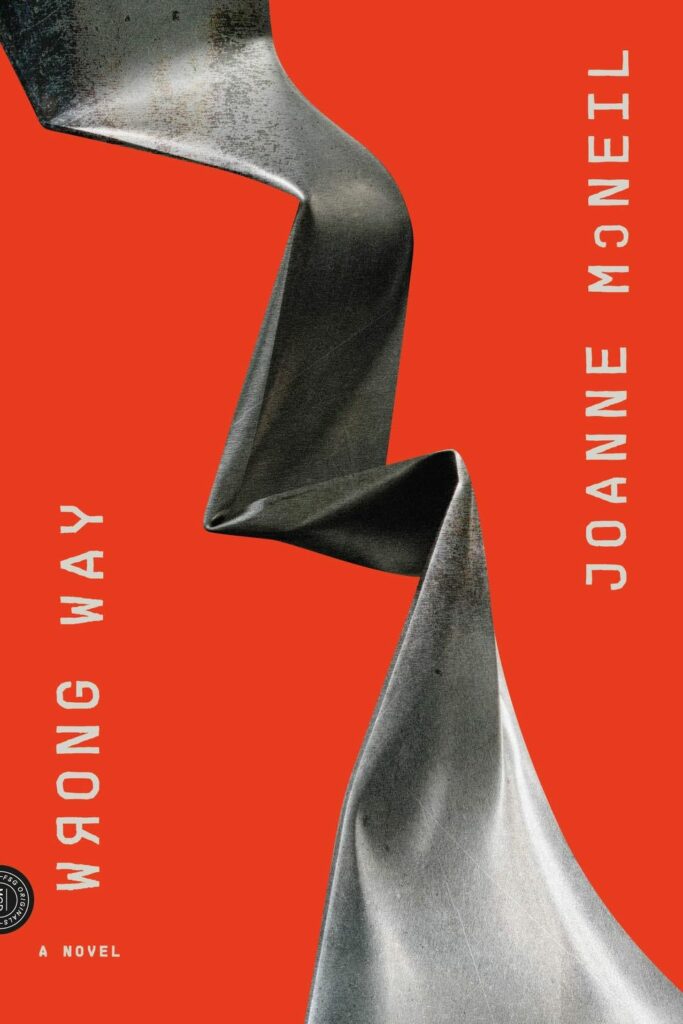

Joanne McNeil, the author of the thrilling new satire Wrong Way, discusses the hollow promises and ethical quagmires at the heart of the industry

WHAT’S GOING ON underneath the hood of the self-driving car industry? According to Joanne McNeil, the tech critic behind a thrilling new industry satire, the harsh reality is a whole lot of “bizarre and usually non-functional technologies that don’t deliver on their promises.”

The author of Lurking makes her blistering fiction debut with Wrong Way, a closely observed novel about how individual lives are shattered by Big Tech’s hollow promises. After years scraping by in the gig economy, Teresa’s life changes forever when she’s hired for a mysterious new role at AllOver, a tech behemoth billing itself as “an experience company.” The sizeable paycheck is seductive, as is the bombastic sales pitch from AllOver: “You are getting paid to learn a trade that, to the rest of the world, hasn’t been invented yet. You’re a VIP with a backstage pass to a new career.”

There’s just one problem. AllOver’s new rideshare service boasts driverless cars (called “CRs”), but the reality is more sinister—instead, contractors like Teresa operate the vehicles while hiding inside a secret compartment. Clinging to the job for financial security, Teresa drives around the clock, witnesses disturbing incidents inside her vehicle, and loses herself in the ouroboros of AllOver’s bureaucracy. Wrong Way is a chilling portrait of economic precarity, and a disturbing reminder of how attempts to optimise life and work only leave us all more alienated than ever before.

McNeil Zoomed with Esquire from her home in Los Angeles to discuss the rise of AI, the uphill battle of life under capitalism, and the gap between Silicon Valley’s words and actions. This interview has been edited for length and clarity.

ESQUIRE: You wrote in your newsletter, “[Wrong Way] is a book I began writing in 2018 and had to put aside when the pandemic happened because I had a vision of the near future—when it’s set—and I stopped believing in it.” How did that moment of questioning change the novel?

JOANNE MCNEIL: The big shift was Covid, of course, but a consequence of Covid was that Boston rents plummeted in the summer of 2020. It was a strange development for a city in a terrible housing crisis. No one was moving to Boston, and the rush of renters that landlords could ordinarily count on wasn’t happening, so suddenly you could find a beautiful studio apartment for $1,200 like it was 1995. In Wrong Way, part of the situation Teresa is going through is dealing with the cost of living in Boston. I had trouble writing those sections knowing what was happening in that moment, even though intellectually I understood that this was just temporary. Still, it impacted my own conviction in believing the world I was writing about. It took more than a year to get it back. I finished a very terrible draft of Wrong Way in fall 2019. My plan was that after my book tour for Lurking, which came out in February 2020 and so never happened, I would get back to revising and finish the book in 2020. In fact, I ended up taking more than a year to get back to it. I axed many chapters and many characters. I really streamlined the book.

I love hearing you talk about the hyper-locality of Boston. In one memorable scene, when Teresa dines in a long-running family-owned restaurant, she asks how the neighbourhood is so resilient, then learns that the family doesn’t even live there—they commute in from another town and get help on the mortgage. Long commutes and housing insecurity are such big parts of the architecture of this story. Why was it important to you to show Teresa constantly traveling on the commuter rail and bouncing around between places to live?

Part of the reason I wrote about Boston is because I grew up outside the city. It’s a place I know better than anywhere in the world. When I first started writing this book, the location was vague, but I needed some tension of reality to push back against the more fanciful and satirical elements of the story. As an experiment, I changed the locations to spaces that I remember from growing up. As for the train, commuter rail culture is huge. In the suburbs of Boston, there’s no efficient way into town—it’s actually quite time-consuming to commute. If you live in these towns, you’re often driving to the train, which doesn’t run consistently, so there’s a lot of friction in getting around. Boston struck me as an ideal setting because of how strange it is, even within the United States. It’s a town with a distorted view of history, which is not a very cool history, and not a great history, either. I live in Los Angeles now, where there’s this aura around Old Hollywood, but in Boston, it’s Paul Revere. Being able to distort that history, and to parody how it’s celebrated in a very misleading way—it felt interesting to put that in conversation with Silicon Valley’s way of distorting history.

One of my favourite things about Wrong Way is the moments where you slip into the absurd, bombastic rhetoric of Big Tech. I had to laugh when you had the CEO of AllOver saying, “I put forth to the public this, an omni-solution, the CR. A green car, a privacy machine, an end to misinformation, a pledge for justice, rights, and a world in which everyone is your neighbor, your friend, your brother.” How did you find and inhabit that voice?

Silicon Valley billionaires talk about a world beyond capitalism, because if their politics is limited to slogans as opposed to actual actions, they can say anything. There’s a murkiness about their own intentions, and an eagerness to please the public at any cost. I noticed that this could even include Silicon Valley billionaires who say things that sound like Democratic Socialists of America slogans. I wouldn’t put it past these guys to start tweeting, “Every billionaire is a policy failure.” They can say it because they’re not going to do anything about it. I was pleased to see that you interviewed Naomi Klein; her book really nailed how there’s this friction between words and actions in the public sphere.

There’s the blatant dishonesty, too. It’s pretty rich to describe the CR as “a privacy machine” when someone is spying on passengers from within.

One of the AllOver executives calls the CR “driverless, not self-driving,” saying that there’s a difference. I came across a real company where the cars are remote-piloted, but they’re advertised as “driverless.” Some of the rhetoric I wrote was exaggeration, but some of it is coming true.

Tell me more about your research. What did you encounter that was surprising and outraging to you?

When I started the book in 2018, my guess was I’d have enough time to complete the book before the technology would be delivered to the public. I followed developments with Waymo especially. A great deal of AI has improved rapidly over the past decade, but the uncertainty of being on the road is something they continually fail to address. About this time last year, right as the book was getting into the “no take-backsies” stage, I went to San Francisco, where I saw self-driving cars on the road everywhere. I started to feel very nervous that maybe the technology had advanced beyond what the book describes. I told myself, “Well, maybe that’s just speculative fiction. Some technologies are available and as a writer, you can imagine a world alt-history style.” Another technology I wrote around is Apple Pay; it doesn’t exist in this world, and that was intentional.

I made it a point to visit Phoenix, where so many of the Waymos are available as robotaxi ride services. The week I got there, it rained every day. It didn’t rain very much from my Northeasterner perspective—it was just a drizzle, but it was enough that every single Waymo I booked had a human driver in the driver’s seat, steering the car and working the pedals. To someone walking down the street in Phoenix, they would recognise this Waymo as a self-driving car, but in fact, an actual human was behind the wheel. Unless someone was in the car, as I was, they wouldn’t know that this car was being driven by a human—they would think it’s just a safety driver there to observe, or they might not see the driver at all. This telegraphs an expectation to the rest of the road that these companies have solved the problem of driving through the rain when they absolutely have not.

Just this past week, there was a huge story in the New York Times about Cruise, showing how reliant these vehicles are on their remote operator workforce. What they’re doing is still not fully public, but my understanding is that they can intervene remotely. There’s enormous inefficiency and risk involved there. Would anyone feel safer in AV technology on the road, knowing how often someone is, in fact, interacting and perhaps intervening? Does that make anyone feel safe? I certainly don’t; I felt safe with the driver in a car with me, but not with someone in a call centre.

To me, this speaks to the cancer of optimisation at all costs. Perhaps cars shouldn’t be driverless!

There are so many ways that this technology could be implemented in a beneficial way. Having come to California from New England, I’ve found it a change to drive on these streets, which are enormous. Trying to take a left turn on a quiet street across six lanes of traffic… I look left, I look right, then I want to look left again, and I still don’t feel safe. That’s when I’d love to have technology assisting me, as opposed to the car driving itself. Unfortunately, that’s a sensible technology that isn’t sexy to investors, which is why we end up with these bizarre and usually non-functional technologies that don’t deliver on their promises.

Teresa witnesses a disturbing incident of sexual violence between passengers in her CR, but to report the incident, she has to jump through an absurd obstacle course of customer service numbers. After months of fruitlessly calling around, it’s a minor miracle that she still cares at all. How do the systems of capitalism and Big Tech distance us from our real, ethical, compassionate selves?

I wanted to dramatise something that many of us grapple with every day: the exhaustion of having a job, having to survive in a city, having to meet all of our actual needs as human beings in the world, while also wanting to give back to the community and wanting to have your own sense of ethics. What Teresa is trying to achieve is so small, but at the same time, there are no simple procedures to make that change. At the point at which this is happening, she’s falling into routines that are familiar to many of us. If you have a stressful day at work and work happens to be twelve hours of your day, do you have that extra inch within you to give back somehow? The guilt that many of us feel when we don’t is terrible. My uncertainty about this is why I had to turn to fiction to express it.

There are times that you can absolutely give more, and there are times where it’s just not possible. What does that line mean for a person? How much can be expected of us? Teresa has so little and her goals are so modest. It was important to me to show someone who’s not driven by success or wealth. Her understanding of a good life is incredibly modest, and trying to secure it is difficult enough. I hoped that would be relatable to readers. Too often in fiction, I see plots about winning and being the best, but I don’t necessarily relate to that. The things that Teresa refuses in life say a lot about who she is and her values.

This book arrives at a fateful moment amid a cultural panic about the growth of AI. Something I’ve always admired about your work is your focus not just on how technology is changing the world, but how it’s changing us, as human beings. As you’ve watched this AI panic play out over the past year, how do you think AI is changing us?

I’m encouraged by the pushback to AI, which feels a little bit new. At this stage, companies like OpenAI are coming to the public after a broader reckoning about Big Tech in society, which means on an interpersonal level, there was healthy skepticism. I was thrilled to see WGA and SAG-AFTRA raise AI as a crucial demand. That feels like a breakthrough, because unfortunately on the level of power and governance, it does feel like there’s a lot of pressure to normalise AI. Sam Altman has charmed many powerful people, Republicans and Democrats alike. But artists, writers, and individuals understood the exploitation at play immediately. They looked past the rhetoric of “this is fair use” and understood that for this technology to work, it means harnessing the life and labor of other people for nothing, and creating value out of that. I’m less concerned about copyrighted material, because powerful people have lawyers who can possibly fight that, and more concerned about our everyday lives being mined in this way.

When I wrote about this in Lurking, it was so small—I felt a little bit self-conscious about even bringing up AI because it was still unsettled. But I do remember feeling like I had to say something about this growing sense of mass exploitation. Because living in the 21st century means living our lives online, our everyday lives become something of value to these enormous corporations. Maybe it’s just pennies to them, but the fact that they can put a price on you sending an email to your grandmother—there’s something really objectionable about that. I hope that people don’t get used to it, and I don’t think they will. I think this really reveals how as people and as users of technology, there’s a trade-off with every action. If technology is compulsory, that makes it even worse.

I read recently that AI wouldn’t be economically sustainable if companies had to pay for the materials they’re using to train the system. Paying people for labor—what a concept!

Maybe that means your technology isn’t supposed to exist. If you have to exploit people for it to work, isn’t that your answer? It’s the question that so much of social media presents: should these platforms exist if they need content moderators, and if they need to traumatise people in order to provide this allegedly “free” service? It’s not free, because there are trade-offs to participate in it. Should various AI applications exist if they need to exploit not just copyrighted material, but the lives of people going about their business on the Internet?

This book traffics in some very dark themes, but it’s studded with moments of real beauty, too. In this technocapitalist hellscape, where are you finding beauty and hope, these days?

That part of the book, I really pulled from my own life. Even in moments when I feel screwed, I still have friendships and family. I can go for a hike. I can go out in the world. With Teresa, I found it important to show someone who’s still alive in the world, even in moments of great precarity. The misery of precarity is very real, but there’s still a world where we can go for a walk on the trail, go to the park, watch the sunset. That world is still available to us. That’s what Teresa values. Being the best at work, being a high-income individual—none of that matters to her. What matters is having a fulfilling life and enjoying the world.

This story originally appeared on Esquire U.S.
